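For my graduation project at school, I built a computer-vision-based Lego sorting machine.

The part that detects Lego pieces on the conveyor belt was developed with OpenCV. I then built a model that classifies which block each Lego image handed over by OpenCV shows.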

Model overview

The model takes an 88x88 grayscale image as input and outputs one of 11 Lego block classes as its prediction.

Loss function: categorical_crossentropy

Optimizer: Adam
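
To make the loss concrete, here is a minimal sketch of how categorical cross-entropy scores a single prediction over the 11 classes. It is plain Python rather than the Keras implementation, and the logits and the true class index are made-up values for illustration.

```python
import math

NUM_CLASSES = 11  # the model distinguishes 11 Lego block types


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]  # subtract max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


def categorical_crossentropy(one_hot, probs, eps=1e-7):
    """Cross-entropy between a one-hot label and predicted probabilities."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(one_hot, probs))


# Hypothetical example: the true class is index 3, and the model favors it.
true_class = 3
one_hot = [1.0 if i == true_class else 0.0 for i in range(NUM_CLASSES)]
logits = [0.0] * NUM_CLASSES
logits[true_class] = 4.0

probs = softmax(logits)
loss = categorical_crossentropy(one_hot, probs)
uniform_loss = categorical_crossentropy(one_hot, [1.0 / NUM_CLASSES] * NUM_CLASSES)

print(f"confident prediction loss: {loss:.3f}")
print(f"uniform guess loss: {uniform_loss:.3f}")
```

A model that guesses uniformly over 11 classes has a loss of ln(11), about 2.398, so a validation loss well below that value indicates the classifier has learned something.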

Training techniques used

- Batch normalization
- Weight initialization (he_normal)
- 1x1 convolution
- Dropout layer
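
Of the techniques listed above, the 1x1 convolution is perhaps the least intuitive: it applies the same small dense layer across the channels of every pixel, mixing channels without looking at neighboring pixels. The NumPy sketch below illustrates this. The array sizes and random values are illustrative and not taken from the trained model, and the weights only approximate `he_normal` (Keras draws from a truncated normal with the same standard deviation).

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical feature map: 4x4 spatial grid with 32 channels.
feature_map = rng.standard_normal((4, 4, 32))

# A 1x1 convolution with 32 output filters is a (32, 32) weight matrix plus a bias,
# shared by every pixel. He initialization uses std = sqrt(2 / fan_in).
fan_in = 32  # 1 * 1 * input channels
weights = rng.standard_normal((32, 32)) * np.sqrt(2.0 / fan_in)
bias = np.zeros(32)

# Apply the same channel-mixing matrix at each spatial position.
output = feature_map @ weights + bias

# Equivalent explicit loop over pixels, showing that no spatial mixing happens.
looped = np.empty_like(output)
for row in range(4):
    for col in range(4):
        looped[row, col] = feature_map[row, col] @ weights + bias

print(output.shape)
print(np.allclose(output, looped))
```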

Model source code

In [1]:

%matplotlib inline

import time
from datetime import datetime

import cv2
import matplotlib.pyplot as plt
import numpy as np
import tensorflow as tf
from tensorflow.keras import Sequential
from tensorflow.keras import optimizers
from tensorflow.keras.callbacks import EarlyStopping, TensorBoard
from tensorflow.keras.layers import Input, Activation, Dense, Flatten, RepeatVector, Reshape
from tensorflow.keras.layers import Conv2D, MaxPooling2D, Dropout, BatchNormalization
from tensorflow.keras.models import Model
from tensorflow.keras.preprocessing.image import ImageDataGenerator

In [2]:

# Scale pixel values from [0, 255] to [0, 1]; no other augmentation is applied.
train_datagen = ImageDataGenerator(rescale=1./255)
train_generator = train_datagen.flow_from_directory(
    'dataset/train',
    target_size=(88, 88),
    batch_size=70,
    color_mode="grayscale",
    class_mode='categorical')

# Despite its name, test_generator reads the validation split (dataset/valid).
test_datagen = ImageDataGenerator(rescale=1./255)
test_generator = test_datagen.flow_from_directory(
    'dataset/valid',
    target_size=(88, 88),
    batch_size=80,
    color_mode="grayscale",
    class_mode='categorical')
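
At inference time, a crop coming from the OpenCV detector has to go through the same preprocessing as the generators above: 88x88, single channel, scaled to [0, 1]. The following is a sketch under assumptions: `preprocess_crop` is a hypothetical helper, the input is assumed to be a grayscale `uint8` array, and a nearest-neighbor resize written in NumPy stands in for whatever resize (for example `cv2.resize`) the real pipeline uses.

```python
import numpy as np

TARGET_SIZE = (88, 88)  # must match target_size passed to flow_from_directory


def preprocess_crop(gray_crop):
    """Convert a grayscale uint8 crop of shape (H, W) to a (1, 88, 88, 1) float32 batch."""
    height, width = gray_crop.shape
    # Nearest-neighbor resize: pick the source row/column for each target pixel.
    rows = np.arange(TARGET_SIZE[0]) * height // TARGET_SIZE[0]
    cols = np.arange(TARGET_SIZE[1]) * width // TARGET_SIZE[1]
    resized = gray_crop[rows[:, None], cols[None, :]]
    # Same scaling as ImageDataGenerator(rescale=1./255).
    scaled = resized.astype(np.float32) / 255.0
    # Add the batch axis in front and the channel axis at the end.
    return scaled[np.newaxis, :, :, np.newaxis]


# Hypothetical 120x100 crop filled with random pixel values.
crop = np.random.default_rng(0).integers(0, 256, size=(120, 100), dtype=np.uint8)
batch = preprocess_crop(crop)
print(batch.shape, batch.dtype)
```

The resulting array has the shape the model expects for a single image, so it could be passed to `model.predict` once the model is trained.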

In [3]:

model = Sequential()

# Block 1: two (3x3 conv -> 1x1 conv -> batch norm -> ReLU) stages with 32 filters
model.add(Conv2D(32, kernel_size=(3, 3), input_shape=(88, 88, 1), kernel_initializer='he_normal'))
model.add(Conv2D(32, kernel_size=(1, 1), strides=(1, 1), padding='valid', kernel_initializer='he_normal'))
model.add(BatchNormalization())
model.add(Activation('relu'))
# input_shape is only needed on the first layer, so it is omitted from here on
model.add(Conv2D(32, kernel_size=(3, 3), kernel_initializer='he_normal'))
model.add(Conv2D(32, kernel_size=(1, 1), strides=(1, 1), padding='valid', kernel_initializer='he_normal'))
model.add(BatchNormalization())
model.add(Activation('relu'))
model.add(MaxPooling2D(pool_size=(2, 2)))
model.add(Dropout(0.7))

# Block 2: same structure with 64 filters
model.add(Conv2D(64, kernel_size=(3, 3), kernel_initializer='he_normal'))
model.add(Conv2D(64, kernel_size=(1, 1), strides=(1, 1), padding='valid', kernel_initializer='he_normal'))
model.add(BatchNormalization())
model.add(Activation('relu'))
model.add(Conv2D(64, kernel_size=(3, 3), kernel_initializer='he_normal'))
model.add(Conv2D(64, kernel_size=(1, 1), strides=(1, 1), padding='valid', kernel_initializer='he_normal'))
model.add(BatchNormalization())
model.add(Activation('relu'))
model.add(MaxPooling2D(pool_size=(2, 2)))
model.add(Dropout(0.5))

# Classifier head: 256 hidden units, then softmax over the 11 block classes
model.add(Flatten())
model.add(Dense(256, kernel_initializer='he_normal'))
model.add(BatchNormalization())
model.add(Activation('relu'))
model.add(Dropout(0.7))
model.add(Dense(11, activation='softmax', kernel_initializer='he_normal'))

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
model.summary()
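
Because every convolution here uses the default `'valid'` padding, each 3x3 convolution trims two pixels from the width and height, the 1x1 convolutions leave the size unchanged, and each 2x2 max pooling halves it. The sketch below traces the spatial size through the layers in plain Python, as a rough cross-check against what `model.summary()` prints; it does not build the Keras model itself.

```python
def out_size(size, kernel, stride=1):
    """Output size of a 'valid' convolution or pooling along one axis."""
    return (size - kernel) // stride + 1


# (layer name, kernel size, stride) in the order the layers are added
layers = [
    ("conv3x3", 3, 1), ("conv1x1", 1, 1),
    ("conv3x3", 3, 1), ("conv1x1", 1, 1),
    ("maxpool2x2", 2, 2),
    ("conv3x3", 3, 1), ("conv1x1", 1, 1),
    ("conv3x3", 3, 1), ("conv1x1", 1, 1),
    ("maxpool2x2", 2, 2),
]

size = 88
trace = [("input", size)]
for name, kernel, stride in layers:
    size = out_size(size, kernel, stride)
    trace.append((name, size))

for name, s in trace:
    print(f"{name:>10}: {s}x{s}")

flattened = size * size * 64          # 64 channels leave the last conv block
dense_params = flattened * 256 + 256  # weights plus biases of Dense(256)
print("flattened features:", flattened)
print("Dense(256) parameters:", dense_params)
```

The final feature map is 19x19x64, so the flattened vector has 23,104 features and the first dense layer alone holds 5,914,880 parameters, which is where most of the model's weights sit.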

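In [4]:

# Stop training when val_loss has not improved for 10 consecutive epochs
early_stopping = EarlyStopping(monitor='val_loss', mode='min', patience=10)

# Write TensorBoard logs to a timestamped directory so runs do not overwrite each other
logdir = "logs/" + datetime.now().strftime("%Y%m%d-%H%M%S")
tensorboard = TensorBoard(log_dir=logdir)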
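
The patience rule used by the early-stopping callback above can be sketched in a few lines of plain Python. This is a simplified illustration of the idea (no `min_delta`, no weight restoring), not the Keras source, and the loss values are made up.

```python
def stopping_epoch(val_losses, patience=10):
    """Return the 1-based epoch at which training would stop, or None if it never stops."""
    best = float("inf")
    wait = 0  # epochs since the last improvement
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return None


# Hypothetical run: the loss improves for 3 epochs, then plateaus.
plateau_run = [1.0, 0.8, 0.7] + [0.75] * 12
print(stopping_epoch(plateau_run, patience=10))

# Hypothetical run that keeps improving: early stopping never triggers.
print(stopping_epoch([1.0, 0.9, 0.8, 0.7], patience=10))
```

With `patience=10` and only 20 epochs, the callback can only cut training short if the validation loss stops improving within the first half of the run.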

In [5]:

# fit_generator is deprecated in TensorFlow 2.x; model.fit accepts generators directly
model.fit(
    train_generator,
    steps_per_epoch=1350,
    epochs=20,
    validation_data=test_generator,
    validation_steps=235,
    callbacks=[early_stopping, tensorboard])
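
`steps_per_epoch` and `validation_steps` count batches, not images, so the number of images drawn per epoch is the step count times the batch size. The post does not state the dataset size, so the sample counts in the second half of this sketch are hypothetical; in the notebook, the real counts would presumably come from the generators (for example `train_generator.samples`).

```python
import math


def steps_for_one_pass(num_samples, batch_size):
    """Number of batches needed to see every sample once."""
    return math.ceil(num_samples / batch_size)


# Images drawn per epoch with the settings used in this post.
train_images_per_epoch = 1350 * 70  # steps_per_epoch * training batch_size
valid_images_per_epoch = 235 * 80   # validation_steps * validation batch_size
print(train_images_per_epoch, valid_images_per_epoch)

# Hypothetical dataset sizes, only to show the calculation.
train_steps = steps_for_one_pass(10_000, 70)
valid_steps = steps_for_one_pass(2_000, 80)
print(train_steps, valid_steps)
```

If the step count exceeds what one pass over the data needs, the generator simply loops, and each epoch sees some images more than once.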

In [11]:

print("-- Evaluate --")
# evaluate_generator is deprecated; model.evaluate accepts generators directly
scores = model.evaluate(test_generator, steps=5)
print("%s: %.2f%%" % (model.metrics_names[1], scores[1] * 100))

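In [12]:

print("-- Predict --")
# predict_generator is deprecated; model.predict accepts generators directly.
# The generator shuffles by default, so the rows are not in a fixed file order.
output = model.predict(test_generator, steps=5)
np.set_printoptions(formatter={'float': lambda x: "{0:0.3f}".format(x)})
print(test_generator.class_indices)
print(output)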
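
The printed `output` is a matrix of softmax probabilities, one row per image, and `class_indices` maps class names to column indices. Turning a row back into a class name takes an `argmax` and an inverted mapping. The sketch below uses three made-up class names and probabilities to stay short; the real model has 11 columns, and the actual names come from the dataset's subdirectories.

```python
import numpy as np

# Hypothetical mapping in the format returned by test_generator.class_indices.
class_indices = {"brick_1x2": 0, "brick_2x2": 1, "plate_1x4": 2}

# Hypothetical softmax output for two images (rows) over three classes (columns).
output = np.array([
    [0.05, 0.90, 0.05],
    [0.70, 0.10, 0.20],
])

# Invert the mapping so a column index can be looked up as a class name.
index_to_class = {index: name for name, index in class_indices.items()}

predicted_indices = output.argmax(axis=1)
predicted_names = [index_to_class[int(i)] for i in predicted_indices]
confidences = output.max(axis=1)

for name, confidence in zip(predicted_names, confidences):
    print(f"{name}: {confidence:.3f}")
```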

In [13]:

model.save("lego_sorter_v7.h5")

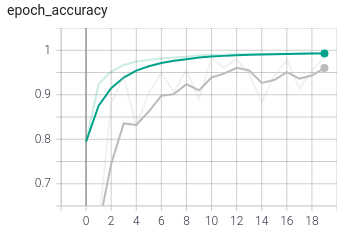

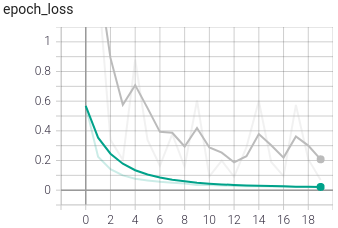

Model training results